Analog Neural Networks

September 19, 2022

Computing machines existed before the electronic analog computers of the early 20th century and the digital computers of the late 20th century and beyond. The Analytical Engine that Charles Babbage (1791-1871) attempted to build in the 19th century is evidence that mechanical systems were one means of computation available to earlier generations. One interesting mechanical computing device was the wheel-on-disk integrator, invented by B.H. Hermann in 1814.[1]

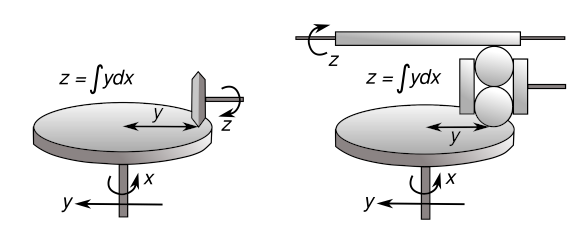

Two versions of the mechanical disk integrator. Left, the wheel-on-disk integrator, invented by B.H. Hermann in 1814 and used by Vannevar Bush (1890-1974) in the MIT differential analyzer.[2] Right, a ball-on-disk version invented by Hannibal Ford (1877–1955) in 1919.[3] The mechanical principle is that the wheel or ball spins faster when it sits toward the outer edge of the disk, where the disk surface moves faster. (Created by the author using Inkscape. Also uploaded to Wikimedia Commons.)
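The integrating action can be sketched numerically. In a differential analyzer the disk angle θ plays the role of the independent variable and the wheel's distance r from the disk center plays the role of the integrand. Assuming the wheel (radius a) rolls without slipping, a small disk rotation dθ moves the contact point by r dθ, so the wheel turns by r dθ / a, and its total rotation is (1/a) ∫ r dθ. The function name and numbers below are illustrative assumptions, not a model of any particular machine.

```python
import math

def wheel_on_disk(r_of_theta, theta_end, wheel_radius, steps=10_000):
    """Rotation (radians) of the integrating wheel as the disk turns 0 -> theta_end.

    Assumes ideal rolling without slip: the disk surface under the wheel moves
    r * d_theta, so the wheel turns d_phi = r * d_theta / a. Summing these
    gives phi = (1/a) * integral of r d_theta.
    r_of_theta: wheel distance from disk centre as a function of disk angle,
    in the same length units as wheel_radius.
    """
    d_theta = theta_end / steps
    phi = 0.0
    for k in range(steps):
        # midpoint rule: wheel position during this small slice of disk rotation
        r_mid = r_of_theta((k + 0.5) * d_theta)
        phi += r_mid * d_theta / wheel_radius
    return phi

# Wheel held 2 cm from the centre, 1 cm wheel radius, one full disk turn
print(wheel_on_disk(lambda th: 2.0, 2 * math.pi, 1.0) / (2 * math.pi))  # about 2.0 wheel turns
# Wheel moved outward in proportion to disk angle: phi = 0.1 * theta**2 / 2
print(wheel_on_disk(lambda th: 0.1 * th, 10.0, 1.0))  # about 5.0
```

The second call shows the integrator at work: feeding in a linearly growing r produces a quadratically growing output, just as ∫ x dx = x²/2.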

The wheel-on-disk integrator was used by

Vannevar Bush (1890-1974) in the

MIT differential analyzer. Differential analyzers were impressive enough to be included in two

science fiction films; namely,

Earth vs. the Flying Saucers (1956,

Fred F. Sears, Director)[4] and

When Worlds Collide (1951,

Rudolph Maté, Director)[5]. As electronics developed, especially stable

operational amplifiers such as the

Philbrick K2-W, analog computation was transferred from the mechanical to the electronic realm. An

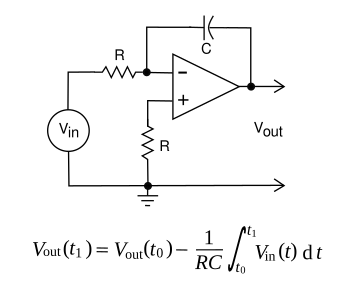

operational amplifier integrator is shown in the figure.

An operational amplifier integrator. In operation, the capacitor is discharged so that the output voltage is zero at time zero. Placing a resistor of the same value at the non-inverting input minimizes the drift caused by input bias currents. (Created using Inkscape.)
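For an ideal op-amp in this inverting configuration, the output is Vout(t) = -(1/RC) ∫ Vin dt, starting from Vout(0) = 0 once the capacitor has been discharged. The sketch below integrates that relation numerically; the function name and component values are assumptions chosen for illustration, and it ignores real-world limits such as output saturation, finite gain, and bias current.

```python
import math

def opamp_integrator(v_in, r_ohms, c_farads, t_end, steps=10_000):
    """Ideal inverting integrator: Vout(t) = -(1/RC) * integral of Vin from 0 to t.

    Assumes an ideal op-amp (infinite gain, no saturation, no bias current)
    and a capacitor discharged at t = 0, so Vout(0) = 0.
    v_in: function of time (seconds) returning the input voltage (volts).
    Returns lists of sample times and output voltages.
    """
    dt = t_end / steps
    rc = r_ohms * c_farads
    v_out = 0.0
    times, outputs = [0.0], [0.0]
    for k in range(steps):
        t0, t1 = k * dt, (k + 1) * dt
        # trapezoidal rule for the integral of Vin over [t0, t1]
        area = 0.5 * (v_in(t0) + v_in(t1)) * dt
        v_out -= area / rc
        times.append(t1)
        outputs.append(v_out)
    return times, outputs

# 1 V step into R = 100 kΩ, C = 1 µF (RC = 0.1 s): output ramps at -10 V/s
times, vout = opamp_integrator(lambda t: 1.0, 100e3, 1e-6, 0.1)
print(vout[-1])  # about -1.0 V after 0.1 s

# A 10 Hz sine input integrates to a shifted, inverted cosine
times, vout = opamp_integrator(lambda t: math.sin(2 * math.pi * 10 * t), 100e3, 1e-6, 0.025)
```

The minus sign comes from the inverting topology; chaining two such stages (or adding an inverter) restores the sign, which is how analog computers built up solutions to differential equations.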

I'm a member of the baby boomer generation, so digital computing wasn't part of my undergraduate physics education. Instead, I had several laboratory exercises in analog computation, one of which was the calculation of the trajectory of projectiles launched on Jupiter and on Earth. The results of the calculation, as displayed on an x-y chart recorder, were as you would expect from the 2.4 times difference in surface gravity between the planets.
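An analog computer solves this problem by chaining integrators: constant acceleration is integrated to give velocity, and velocity is integrated to give position. A digital sketch of the same chain follows. It assumes no air resistance, a flat surface, and uses the post's 2.4 ratio for Jupiter's surface gravity; the launch speed and angle are arbitrary illustrative values.

```python
import math

G_EARTH = 9.81               # m/s^2, approximate standard value
G_JUPITER = 2.4 * G_EARTH    # assumption: uses the 2.4x ratio quoted above

def trajectory(v0, angle_deg, g, dt=1e-4):
    """Integrate x'' = 0, y'' = -g from the origin until the projectile lands.

    Mirrors an analog-computer patch: acceleration -> velocity -> position.
    Each step uses the exact constant-acceleration update, and the landing
    point is found by linear interpolation. Returns a list of (x, y) points.
    No air drag is modelled.
    """
    if g <= 0:
        raise ValueError("g must be positive")
    theta = math.radians(angle_deg)
    vx, vy = v0 * math.cos(theta), v0 * math.sin(theta)
    x, y = 0.0, 0.0
    points = [(x, y)]
    while True:
        x_new = x + vx * dt
        y_new = y + vy * dt - 0.5 * g * dt * dt   # uses velocity at start of step
        vy -= g * dt
        if y_new < 0.0:
            frac = y / (y - y_new)                 # fraction of the step before y = 0
            points.append((x + frac * (x_new - x), 0.0))
            return points
        x, y = x_new, y_new
        points.append((x, y))

earth = trajectory(20.0, 45.0, G_EARTH)
jupiter = trajectory(20.0, 45.0, G_JUPITER)
print(earth[-1][0] / jupiter[-1][0])                           # about 2.4: range scales as 1/g
print(max(y for _, y in earth) / max(y for _, y in jupiter))   # about 2.4: so does peak height
```

Both the range (v0² sin 2θ / g) and the peak height (v0² sin² θ / 2g) scale as 1/g, which is why the two chart-recorder traces differ by the gravity ratio.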

Deep neural networks are the present darlings of computer science, having enabled such things as speech recognition and its companion technology, natural language processing, image recognition, and e-commerce recommender systems. These are presently implemented using considerable computer hardware and at a substantial energy cost. It's estimated that global data centers presently consume about 200 terawatt-hours annually, which is the electrical usage of about 18 million United States households (at 11,000 kilowatt-hours each per year). Is there a better way?
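The household comparison is a unit conversion that is easy to check. The sketch below divides the quoted annual data-center consumption by the quoted per-household figure, and also expresses the annual total as a continuous average power. The function names are illustrative, and the year length of 8,766 hours (averaging in leap years) is an assumption.

```python
HOURS_PER_YEAR = 365.25 * 24   # 8,766 hours, averaging in leap years

def households_equivalent(annual_twh, household_kwh_per_year):
    """Number of households whose yearly electricity use equals annual_twh."""
    annual_kwh = annual_twh * 1e9          # 1 TWh = 10^9 kWh
    return annual_kwh / household_kwh_per_year

def average_power_gw(annual_twh):
    """Continuous power (GW) that delivers annual_twh over one year."""
    watts = annual_twh * 1e12 / HOURS_PER_YEAR   # Wh per hour = W
    return watts / 1e9

print(households_equivalent(200, 11_000) / 1e6)  # about 18.2 (million households)
print(average_power_gw(200))                    # about 22.8 GW
```

An average draw of roughly 23 gigawatts is a convenient figure to hold against the brain's power budget discussed next.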

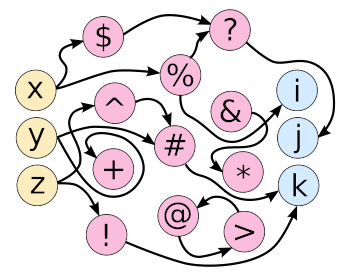

A decade ago, I created this Feral Network graphic as a counterpoint to neural networks.

Feel free to use this image for non-commercial purposes, such as a personal coffee mug or T-shirt.

(Created by the author using Inkscape.)

The human brain does wonderful things using an energy budget of only twenty watts. (Architects once estimated an auditorium's cooling requirement by considering each person to be the equivalent of a 75 watt incandescent light bulb. Our rampant rotundness has scaled this up to 100 watts.)

Neurons and synapses in the human brain operate through chemical action mediated by action potentials of about 100 millivolts that allow millisecond processing.[6]

Artificial solid-state neurons are not limited by such a voltage constraint, and they can be fabricated at the nanoscale at a size that's a thousand times smaller than their biological counterparts.[6]

Researchers at the MIT-IBM Watson AI Lab of the Massachusetts Institute of Technology (Cambridge, Massachusetts) have developed prototype nanoscale, high-voltage protonic programmable resistors that are 10,000 times faster than biological synapses.[6-7] Such resistors could be a key building block for an analog deep learning neural network, just as transistors are for the digital version.[7]
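One common way programmable resistors serve as neural-network building blocks is the resistive crossbar, in which each resistor's conductance stores a weight. Applying input voltages to the rows, Ohm's law gives each resistor a current G·V, and Kirchhoff's current law sums those currents on each column, so the column currents form a matrix-vector product in a single analog step. The sketch below is that generic idea, not the MIT team's specific array design; it assumes ideal wiring with no resistive losses and columns held at ground, and all values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents (amperes) of an ideal resistive crossbar.

    conductances[i][j]: conductance (siemens) of the resistor joining input
    row i to output column j. voltages[i]: voltage applied to row i.
    Ohm's law gives each resistor a current G * V; Kirchhoff's current law
    sums them on each column: I_j = sum_i G_ij * V_i. Assumes ideal wires
    and columns held at 0 V (e.g. by transimpedance amplifiers).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v
    return currents

# Hypothetical 2x2 array of microsiemens-scale conductances
weights = [[1e-6, 2e-6],
           [3e-6, 4e-6]]
print(crossbar_currents(weights, [0.1, 0.2]))  # about [7e-07, 1e-06] amperes
```

Because a conductance cannot be negative, signed weights are commonly represented as the difference between two devices' conductances; that detail is omitted here for brevity.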

The energy-efficient shuttling and intercalation of protons that cause the resistance modulation occur on a nanosecond timescale at room temperature.[6] The resistors are compatible with standard silicon processing, and they have symmetric, linear, and reversible modulation characteristics with a twenty-fold dynamic range of conductance states, thereby exceeding all performance characteristics of their biological counterparts.[6]
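The terms "symmetric", "linear", and "reversible" describe how a device responds to programming pulses. The toy model below illustrates them under loudly stated assumptions: the class name, the minimum conductance, and the number of states are hypothetical, and only the twenty-fold range is taken from the text. Each pulse moves the conductance by the same step regardless of its current value (linear), up and down pulses have equal and opposite effects (symmetric), and so a sequence of pulses can be undone (reversible). This is an idealization, not a model of the MIT device's measured behavior.

```python
class ProgrammableResistor:
    """Toy, idealized model of a pulse-programmed conductance.

    Hypothetical parameters: g_min and n_states are made up; the ratio
    g_max / g_min = 20 echoes the twenty-fold dynamic range quoted above.
    """

    def __init__(self, g_min=1e-6, dynamic_range=20.0, n_states=1000):
        self.g_min = g_min
        self.g_max = g_min * dynamic_range
        # equal step per pulse across the whole window ("linear")
        self.step = (self.g_max - self.g_min) / (n_states - 1)
        self.g = g_min

    def pulse(self, polarity, count=1):
        """Apply count pulses; polarity +1 raises G, -1 lowers it by the same step."""
        if polarity not in (1, -1):
            raise ValueError("polarity must be +1 or -1")
        self.g += polarity * count * self.step
        # the device cannot leave its physical conductance window
        self.g = min(self.g_max, max(self.g_min, self.g))
        return self.g

r = ProgrammableResistor()
r.pulse(+1, 100)
r.pulse(-1, 100)   # symmetric steps return the device to where it started
print(r.g)         # about 1e-06 S, i.e. back at g_min
```

In training, a weight update of a given size then maps to the same number of pulses in either direction; a device whose up and down steps differ would accumulate errors over many updates.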

Bilge Yildiz, a professor of Materials Science and Engineering at MIT and senior author of the paper describing this research, says, "The working mechanism of the device is electrochemical insertion of the smallest ion, the proton, into an insulating oxide to modulate its electronic conductivity. Because we are working with very thin devices, we could accelerate the motion of this ion by using a strong electric field, and push these ionic devices to the nanosecond operation regime."[7]
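Why thin devices and strong fields help can be seen from a deliberately simple drift model. Assuming a constant ion mobility μ, the field across a layer of thickness d at voltage V is E = V/d, the drift speed is μE, and the transit time is t = d / (μE) = d² / (μV). The sketch below uses that model only to show scaling; the mobility value is hypothetical, and real ion transport at very high fields is nonlinear and can grow faster than linearly with field.

```python
def drift_transit_time(thickness_m, voltage_v, mobility_m2_per_vs):
    """Transit time (s) for an ion to cross a layer in a uniform field.

    Simple linear-drift model (an assumption): E = V / d, speed = mu * E,
    so t = d / (mu * E) = d**2 / (mu * V). Ignores high-field nonlinearity.
    """
    field = voltage_v / thickness_m
    speed = mobility_m2_per_vs * field
    return thickness_m / speed

MU = 1e-12  # m^2/(V*s), hypothetical mobility used only to show scaling
base = drift_transit_time(10e-9, 1.0, MU)
thin = drift_transit_time(5e-9, 1.0, MU)
high_v = drift_transit_time(10e-9, 100.0, MU)
print(base / thin)    # about 4: halving the thickness quarters the time
print(base / high_v)  # about 100: a hundredfold voltage is a hundredfold faster
```

Under this model, thinning the layer pays off twice (a shorter path and a stronger field at the same voltage), which is consistent with the emphasis on very thin devices in the quote.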

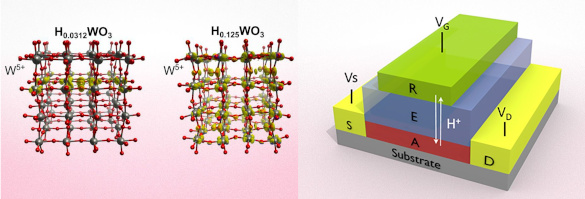

Details of the MIT artificial synapse. Left and center are proton-loaded structures of tungsten trioxide (WO3), a candidate synapse material investigated by the MIT research team in a 2020 study.[8] On the right is an artificial synapse in which ions of hydrogen (protons, shown as H+) can migrate back and forth between a hydrogen reservoir material (R) and tungsten trioxide (A) by passing through an electrolyte layer (E). The movement of the ions is controlled by the polarity and voltage applied through gold electrodes (S and D), and this changes the electrical resistance of the device. (Left image and right image courtesy of MIT and distributed under the Creative Commons Attribution Non-Commercial No Derivatives license.)

The artificial synapses operate at voltages a hundred times higher than those of biological synapses, and this enables faster ionic conduction.[7] Lead author Murat Onen, an MIT postdoctoral researcher, explains how this increase in speed could advance neural networks. "Once you have an analog processor, you will no longer be training networks everyone else is working on. You will be training networks with unprecedented complexities that no one else can afford to, and therefore vastly outperform them all. In other words, this is not a faster car, this is a spacecraft."[7]

Phosphorus-doped silica, an inorganic phosphosilicate glass, is one material that the MIT team has used for the resistors. It enables ultrafast proton movement through a multitude of nanometer-sized pores that provide paths for proton diffusion at high voltages, and it has been found to be robust for millions of cycles.[7] Other materials studied are intercalation compounds similar to those found in lithium-ion batteries.[8] One of these is tungsten trioxide (WO3), as shown in the figure above.[8]

Just after finishing this article, I noticed that the ever-educational Sabine Hossenfelder (b. 1976) posted a YouTube video relevant to this topic.[9]

References:

1. A. Ben Clymer, "The Mechanical Analog Computers of Hannibal Ford and William Newell," IEEE Annals of the History of Computing, vol. 15, no. 2 (1993), pp. 19-34, https://doi.org/10.1109/85.207741.

2. V. Bush and H. Hazen, "The differential analyzer: a new machine for solving differential equations," Journal of the Franklin Institute, vol. 212, no. 4 (October 1931), pp. 447-488, https://doi.org/10.1016/S0016-0032(31)90616-9.

3. H.C. Ford, "Mechanical movement," U.S. Patent No. 1,317,915, October 7, 1919 (via Google Patents).

4. Earth vs. the Flying Saucers (1956, Fred F. Sears, Director).

5. When Worlds Collide (1951, Rudolph Maté, Director).

6. Murat Onen, Nicolas Emond, Baoming Wang, Difei Zhang, Frances M. Ross, Ju Li, Bilge Yildiz and Jesús A. del Alamo, "Nanosecond protonic programmable resistors for analog deep learning," Science, vol. 377, no. 6605 (July 28, 2022), pp. 539-543, https://doi.org/10.1126/science.abp8064.

7. Adam Zewe, "New hardware offers faster computation for artificial intelligence, with much less energy," MIT Press Release, July 28, 2022.

8. David L. Chandler, "Engineers design a device that operates like a brain synapse," MIT Press Release, June 19, 2020.

9. Sabine Hossenfelder, "What's the difference between a brain and a computer?" YouTube Video, July 30, 2022.
