Ten Rules of Statistics

August 1, 2016

There's a statistic (I suppose) that any article about statistics will likely start with the observation that "there are three kinds of lies: lies, damned lies, and statistics." This saying, which can be traced back to 1891, was popularized by Mark Twain (1835-1910), but its origin is unknown. I wrote about one type of statistical lie in an earlier article (Hacking the p-Value, May 4, 2015).

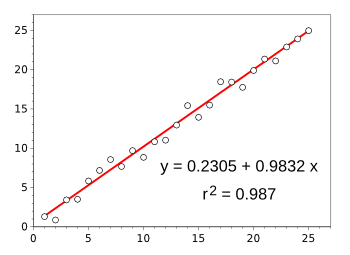

Statistics are an important part of science. Elementary statistical analysis is used to derive a best value for a measured quantity found through several experimental trials, and to assess the quality of a curve fit to data (called "goodness of fit"). Most curve-fitting programs report this as the coefficient of determination, called r-squared in a simple linear regression (see graph).

Regression fit of a straight line to noisy data.

In this example, the noise is just a few percent of full scale, so r² is nearly 99%.

(Analysis using Gnumeric.)
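As a rough illustration of the goodness-of-fit figure quoted in the caption above, the following Python sketch fits a straight line by ordinary least squares and computes r². The synthetic data, the random seed, and the function name `linear_fit` are assumptions made for this example; they are not the data or the Gnumeric analysis behind the graph.

```python
import random


def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope*x + intercept, plus r-squared."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # r-squared = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Hypothetical data: a line y = 2x + 1 with Gaussian noise of sigma = 1,
# which is a few percent of the full-scale range of about 40.
rng = random.Random(42)
xs = [float(i) for i in range(21)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 1.0) for x in xs]

slope, intercept, r_squared = linear_fit(xs, ys)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}, r^2 = {r_squared:.4f}")
```

With noise this small relative to the range of the data, r² should come out close to 1, in line with the caption's remark.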

The physical science and mathematics preprint website, arXiv, publishes statistics papers in several categories, as follows:

stat.AP - Applications: Biology, Education, Epidemiology, Engineering, Environmental Sciences, Medical Research, Physical Sciences, Quality Control, Social Sciences.

stat.CO - Computation: Algorithms, Simulation, Visualization.

stat.ML - Machine Learning: Classification, Graphical Models, High Dimensional Inference.

stat.ME - Methodology: Design, Surveys, Model Selection, Multiple Testing, Multivariate Methods, Signal Processing and Image Processing, Time Series, Smoothing, Spatial Statistics, Survival Analysis, Nonparametric and Semiparametric Methods.

stat.OT - Other Statistics: Work in statistics that does not fit into the other stat classifications.

stat.TH - Statistics Theory: Asymptotics, Bayesian Inference, Decision Theory, Estimation, Foundations, Inference, Testing.

Statistical analysis is especially important in experimental high energy physics, where some types of elementary particles are few and far between. So as not to fool themselves too often, elementary particle physicists have set a certainty of five standard deviations (5-σ) as the threshold of truth. This corresponds to a p-value, the probability of seeing a result at least this extreme by chance alone if the hypothesized effect were absent, of about 0.00003% (one-sided). Few would argue about such a standard.
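The 5-σ figure can be checked with a few lines of Python. This is a minimal sketch that assumes the test statistic follows a standard normal distribution; the function name `one_sided_p_value` is made up for the example.

```python
import math


def one_sided_p_value(n_sigma):
    """Upper-tail probability of a standard normal variable beyond n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


p_one_sided = one_sided_p_value(5.0)
p_two_sided = 2.0 * p_one_sided  # if deviations in either direction count

print(f"one-sided: {p_one_sided:.3e}  ({100.0 * p_one_sided:.7f}%)")
print(f"two-sided: {p_two_sided:.3e}  ({100.0 * p_two_sided:.7f}%)")
```

The one-sided tail is roughly 2.9 × 10⁻⁷, or about one chance in 3.5 million; the two-sided value is twice that.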

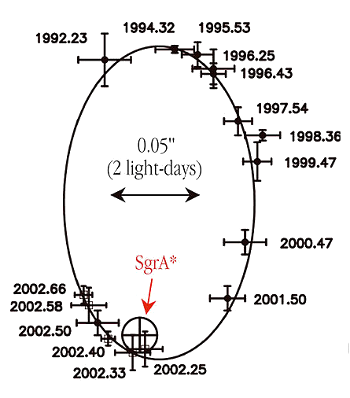

Statistics in astronomy.

Orbit of star S2 around Sagittarius A* showing error bars of position measurement.

The elliptical orbit is a fit to these data.

(Portion of a European Southern Observatory image, via Wikimedia Commons.)

A recent editorial in PLoS Computational Biology by authors from Carnegie Mellon University (Pittsburgh, Pennsylvania), Johns Hopkins University (Baltimore, Maryland), North Carolina State University (Raleigh, North Carolina), Harvard University (Cambridge, Massachusetts), the University of California Berkeley (Berkeley, California), and the University of Toronto (Toronto, Ontario) offers ten rules to remove most of the "lies," and all of the "damned lies," from statistics.[1-2]

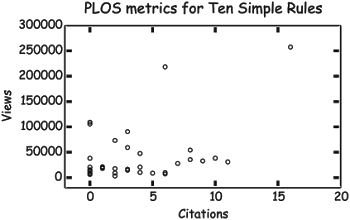

Their article follows in a long tradition of "Ten Rules" articles on PLoS, of which there are about sixty. There's even a PLoS article entitled, "Ten Simple Rules for Writing a PLoS Ten Simple Rules Article."[3] These articles seem to evoke considerable interest, as the following graph indicates.

Citation statistics for Ten Simple Rules articles.

It's seen that they're viewed much more often than cited.

(Fig. 3 of Ref. 3.[3])

As the authors write in their "Ten Simple Rules for Effective Statistical Practice" editorial, "Statisticians are not shy about reminding administrators that statistical science has an impact on nearly every part of almost all organizations." This particular article has generated more than 43,000 views, a statistic that puts it in the top twenty of these "Ten Rules" articles. Says Michael J. Tarr, head of CMU's Department of Psychology,

"The sciences, and, in particular, the fields of psychology and neurobiology, have come under increasing scrutiny in recent years for sometimes poor statistical practices... Straightforward and understandable guidelines as articulated by (Robert E.) Kass and colleagues will help tremendously in reminding both students and faculty as to the importance of statistically well-grounded research. Their paper is an instant 'must-read' for anyone who cares about good and reproducible science."[2]

The following is a summary of the "Ten Simple Rules for Effective Statistical Practice."[1-2]

Statistical Methods Should Enable Data to Answer Scientific Questions

While scientists are skilled at collecting data, they are typically not skilled in the many ways that information can be extracted from the data. The statistician authors of these rules, not unexpectedly, propose that statisticians be consulted at all stages of investigation.

Signals Always Come With Noise

Noise is present in all experimental data, so it's important to accurately express your uncertainty (for example, the error bars in the orbit figure, above), and to identify sources of systematic error.
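One common way to express the random part of that uncertainty for a quantity measured in several trials is the mean together with its standard error. The sketch below assumes independent trials with random errors only; the sample readings and the function name `best_value` are hypothetical, and a systematic error would not show up in this number at all.

```python
import math
import statistics


def best_value(trials):
    """Return the mean of repeated trials and the standard error of that mean."""
    mean = statistics.mean(trials)
    sample_std = statistics.stdev(trials)  # uses n - 1 in the denominator
    std_error = sample_std / math.sqrt(len(trials))
    return mean, std_error


# Hypothetical repeated readings of the same quantity (arbitrary units).
readings = [9.79, 9.82, 9.80, 9.83, 9.81]
mean, std_error = best_value(readings)
print(f"best value = {mean:.3f} +/- {std_error:.3f}")
```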

Plan Ahead, Really Ahead

It's always important to design your experiment carefully. You want to change the variables appropriately, and you also want to simplify your data analysis when it's over.

Worry About Data Quality

Computer programmers are not the only ones who face the "garbage in -> garbage out" problem. Automated data acquisition, and laboratory instruments that perform signal filtering and other preprocessing, might shade your data in unknown ways.

Statistical Analysis Is More Than a Set of Computations

Statistical software may speed analysis, but it's important to do the types of statistical analysis appropriate to your experiment. Are you fitting to a straight line because that's what theory predicts, or does theory predict a different type of curve you should be fitting to?

Keep it Simple

As I learned in the design of industrial experiments (see my earlier article, Tea Party Technologists, November 18, 2011), most experiments can be considerably simplified, since the extra data is, in fact, redundant. One chemist I knew didn't need to fit his data points to a curve, since his data points were so close together that they were the curve. All that data-taking was wasted effort.

Provide Assessments of Variability

When your hypothesis is proven with a weight change of the order of milligrams, and your available analytical balance measures to 10 micrograms, you know that your measurement error is important. In your published paper, it's important to calculate how this uncertainty propagates to your final result.
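For the balance example, a weight change is the difference of two weighings, so the uncertainties of the two readings combine in quadrature. The sketch below assumes the two readings have independent errors and treats the 10 microgram figure as the standard uncertainty of each reading; the masses and the function name are hypothetical.

```python
import math


def difference_with_uncertainty(before, sigma_before, after, sigma_after):
    """Return (after - before) and its standard uncertainty.

    Assumes the two measurements have independent errors, so the
    uncertainties add in quadrature.
    """
    delta = after - before
    sigma_delta = math.hypot(sigma_before, sigma_after)
    return delta, sigma_delta


# Hypothetical weighings in milligrams; 10 micrograms = 0.010 mg per reading.
before_mg, after_mg = 250.000, 251.000
sigma_mg = 0.010

delta, sigma_delta = difference_with_uncertainty(before_mg, sigma_mg, after_mg, sigma_mg)
relative = sigma_delta / abs(delta)
print(f"weight change = {delta:.3f} +/- {sigma_delta:.3f} mg ({100.0 * relative:.1f}%)")
```

Under these assumptions a 1 mg change carries an uncertainty of about 14 micrograms, and any quantity computed from the weight change inherits at least that relative error.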

Check Your Assumptions

Since you're a materials scientist, and not an astronomer, why did you think that it was necessary to do your experiments on the night of the full moon? The argument that "we've always done things that way" doesn't carry much weight in scientific circles.

When Possible, Replicate!

Replication is at the heart of scientific investigation. It is only through replication that your experiments are validated; but, for this to be possible, replicate experiments must be done in the same way. Science also advances when experiments are done with slight changes in variables. Statistics will identify how much a variable needs to be changed to expect a different experimental outcome.

Make Your Analysis Reproducible

Data is one thing, but experimental results are presented in aggregate form. When data are shared, details on the statistical analysis should be shared, also, so that the tables, figures and statistical inferences in your publication can be reproduced exactly.
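One way to make an analysis reproducible in this sense is to ship, along with the data, a script that fixes every source of randomness and records what it ran on. The sketch below is only an illustration: the bootstrap confidence interval stands in for whatever analysis a paper actually uses, and the data values, seed, and function names are hypothetical.

```python
import hashlib
import json
import random
import statistics
import sys


def bootstrap_mean_interval(data, n_resamples=2000, seed=12345):
    """Percentile bootstrap 95% interval for the mean, with a fixed seed."""
    rng = random.Random(seed)  # local generator, so the result is repeatable
    means = sorted(
        statistics.mean(rng.choices(data, k=len(data)))
        for _ in range(n_resamples)
    )
    low = means[int(0.025 * n_resamples)]
    high = means[int(0.975 * n_resamples) - 1]
    return low, high


def analysis_record(data, seed=12345):
    """Bundle the result with what is needed to regenerate it exactly."""
    low, high = bootstrap_mean_interval(data, seed=seed)
    return {
        "data_sha256": hashlib.sha256(json.dumps(data).encode("utf-8")).hexdigest(),
        "seed": seed,
        "python": sys.version.split()[0],
        "mean": statistics.mean(data),
        "ci95": [low, high],
    }


# Hypothetical measurements.
data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]
record = analysis_record(data)
print(json.dumps(record, indent=2))
```

Running the script twice on the same data and interpreter gives the same record, which is the property a reader needs in order to regenerate a published table or figure.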

Most of the above is done routinely by most senior scientists, and they will require as much from their junior team members.

Funding for the authors of the "Ten Simple Rules for Effective Statistical Practice" came from the National Institutes of Health, the Natural Sciences and Engineering Research Council of Canada, and the National Science Foundation.[1]

An example of statistics being used to sensationalize a scientific study.

Punch line (punch panel?) of a cartoon from Randall Munroe's xkcd Comics, licensed under a Creative Commons Attribution-NonCommercial 2.5 License.

(Full cartoon on the xkcd web site.)


References:

- Robert E. Kass, Brian S. Caffo, Marie Davidian, Xiao-Li Meng, Bin Yu, and Nancy Reid, "Editorial - Ten Simple Rules for Effective Statistical Practice," PLoS Comput. Biol., vol. 12, no. 6 (June 9, 2016), Article no. e1004961, doi:10.1371/journal.pcbi.1004961. This is an open access publication.

- Shilo Rea, "Kass Co-Authors 10 Simple Rules To Use Statistics Effectively," Carnegie Mellon University Press Release, June 20, 2016.

- Harriet Dashnow, Andrew Lonsdale, and Philip E. Bourne, "Ten Simple Rules for Writing a PLOS Ten Simple Rules Article," PLoS Comput. Biol., vol. 10, no. 10 (October 23, 2014), Article no. e1003858, doi:10.1371/journal.pcbi.1003858.
