Koomey's Law

October 20, 2011

If you're still doing your computing with a Comptometer, then you likely don't have access to this blog. You would also be one of the few people who have never heard of Moore's Law. This law was formulated in 1965 by Gordon E. Moore, who was at that time the Director of Fairchild Semiconductor's Research and Development Laboratories and subsequently a co-founder of Intel Corporation.

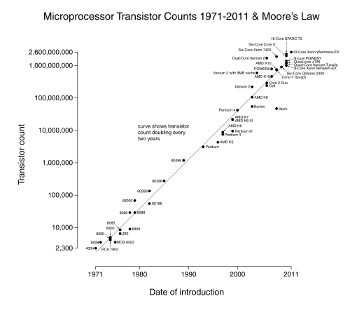

Moore's Law is simply stated: The number of transistors in integrated circuits doubles about every two years.[1] (Moore's 1965 paper actually projected a doubling every year; he revised the period to two years in 1975.) Although Moore might have inadvertently codified a roadmap for integrated circuit manufacturers, thereby making his statement a self-fulfilling prophecy, his law has held true from 1965 to the present day (see figure).
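To get a feel for how quickly a two-year doubling compounds, here is a small Python sketch that computes the growth factor 2^(t/T) for an elapsed time t and doubling period T. The helper name `growth_factor` and the 1965 to 2011 span are my own illustrative choices. Real chips don't track the curve this precisely.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Multiplicative growth after `years` for a quantity that doubles
    once every `doubling_period` years (hypothetical helper)."""
    return 2.0 ** (years / doubling_period)

# Transistors per chip, 1965 to 2011, assuming a strict two-year doubling.
factor = growth_factor(2011 - 1965, 2.0)
print(f"{factor:,.0f}")  # 23 doublings -> 8,388,608
```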

Moore's Law. (Image by W.G. Simon, via Wikimedia Commons.)

Computing, of course, involves not just hardware, but software, too. There's a companion software law to Moore's hardware law called Wirth's Law. The law is named after the preeminent computer scientist Niklaus Wirth, who gave us the Pascal and Modula-2 programming languages. Some of my first major modeling programs were written in Pascal for a Digital Equipment Corporation VAX computer.

Wirth's Law is also simply stated: Software bloat in successive generations of computer software offsets the hardware performance advantages of Moore's Law. Bloat includes program features that are never used (word processing programs are a prime example), and the poor programming habits of software developers who know that memory and CPU cycles are cheap.

By one estimate, commercial software runs 50% slower every eighteen months. I, for one, rarely use malloc() in my C programs, so I'm as guilty as anyone. Wirth's Law also goes by other names, since its truth is so apparent that it's often restated.

If doubling the number of transistors in an integrated circuit didn't come with a proportionate decrease in the power each one requires, chip power consumption would double along with the transistor count, and there would be major problems. I mentioned the energy cost of supercomputing in a previous article (Special K, June 23, 2011).

The fastest computer in the world, at least for the present, is the K Computer of the RIKEN Advanced Institute for Computational Science in Kobe, Japan. The K Computer is rated at eight petaflops, or the equivalent performance of about a million desktop computers.

The K Computer was designed to be energy-efficient, but it still consumes 9.89 megawatts! Energy consumption is becoming an important factor in supercomputing: the top ten supercomputers now draw 4.3 megawatts on average, a considerable jump from the 3.2 megawatts averaged by the top ten just six months earlier.[2]
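The figures above translate directly into an efficiency and a growth rate. This is a minimal sketch that assumes "eight petaflops" means exactly 8 × 10^15 floating-point operations per second and that the 9.89 MW is drawn while sustaining that rate; the variable names are illustrative.

```python
# Figures quoted in the text; "eight petaflops" is taken as exactly 8e15 FLOP/s.
K_FLOPS = 8e15        # floating-point operations per second
K_POWER_W = 9.89e6    # watts

flops_per_watt = K_FLOPS / K_POWER_W   # operations per joule
joules_per_flop = K_POWER_W / K_FLOPS  # energy per operation

# Growth in the top-ten average power draw over six months (3.2 MW -> 4.3 MW).
power_jump = (4.3 - 3.2) / 3.2

print(f"{flops_per_watt / 1e6:.0f} MFLOPS per watt")  # about 809
print(f"{joules_per_flop * 1e9:.2f} nJ per FLOP")      # about 1.24
print(f"top-ten average power up {power_jump:.0%}")     # about 34%
```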

The improvement in the energy efficiency of computer circuits is described by Koomey's Law, which states that the number of computations per unit energy has doubled about every 18 months. This trend has been apparent since the 1950s, but it was first stated as a "law" by Jonathan Koomey, Stephen Berard, Marla Sanchez and Henry Wong in a paper published earlier this year.[3]
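Expressed per decade, an 18-month doubling compounds to roughly a hundredfold improvement. A minimal sketch, assuming an exact 1.5-year doubling period (the fitted value in the paper, discussed below, is 1.57 years); `efficiency_gain` is a hypothetical helper name.

```python
def efficiency_gain(years: float, doubling_period_years: float = 1.5) -> float:
    """Factor by which computations per unit energy grow over `years`,
    assuming a fixed doubling period (about 18 months by default)."""
    return 2.0 ** (years / doubling_period_years)

print(f"{efficiency_gain(10):.1f}x per decade")  # about 101.6x
```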

The lead author of the paper, Jonathan Koomey, is a consulting professor of civil and environmental engineering at Stanford University. His coauthors are from Microsoft (Stephen Berard), Carnegie Mellon University (Marla Sanchez) and Intel (Henry Wong).[4] Their data go back all the way to ENIAC, the Electronic Numerical Integrator and Computer, built from vacuum tubes in 1946.

ENIAC was capable of just a few hundred calculations per second (it could multiply two ten-digit numbers 357 times per second), and it required 150 kilowatts of electrical power to operate.[4] That works out to roughly 420 joules per multiplication.
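The energy per operation is just power divided by operation rate. This sketch assumes the entire 150 kW is charged to multiplication; ENIAC's faster additions would give a lower per-operation figure.

```python
ENIAC_POWER_W = 150e3    # 150 kilowatts
MULTIPLIES_PER_S = 357   # ten-digit multiplications per second

# Watts are joules per second, so dividing by operations per second gives joules per operation.
joules_per_multiply = ENIAC_POWER_W / MULTIPLIES_PER_S
print(f"{joules_per_multiply:.0f} J per multiplication")  # about 420
```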

Quite interesting is the fact that the eighteen-month cycle persisted from the vacuum tube era into transistorized computing. Koomey, quoted in Technology Review, says that "This is a fundamental characteristic of information technology that uses electrons for switching... It's not just a function of the components on a chip."[4]

In 1985, physics Nobel laureate Richard Feynman, who would calculate anything just for the fun of it, predicted that computational efficiency could increase by a factor of $10^{11}$ from what was achieved that year. Koomey's Law predicts a doubling every 1.57 years, so the 24 years from Feynman's prediction to 2009 would give a factor of $2^{24/1.57} \approx 2^{15.29} \approx 40{,}063$. This is remarkable agreement with the actual factor of about 40,000.[4]
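The compounding in this comparison is easy to check numerically. A minimal sketch using the 1.57-year doubling period and the 1985 to 2009 span; keeping the unrounded exponent (15.2866 rather than 15.29) gives a factor just under 40,000.

```python
DOUBLING_PERIOD = 1.57   # years, the fitted value attributed to Koomey et al.
years = 2009 - 1985      # from Feynman's estimate to 2009

doublings = years / DOUBLING_PERIOD
factor = 2.0 ** doublings
print(f"{doublings:.2f} doublings -> factor {factor:,.0f}")
```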

One Internet commentator did a calculation based on Feynman's limit and a doubling every eighteen months, and found that we will reach this fundamental limit in 2043.
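The commentator's exact inputs aren't given, so the sketch below is a reconstruction under my own assumptions: the $10^{11}$ factor is counted from 1985 and the doubling period is held fixed. It lands in the early 2040s, close to, but not exactly, the quoted 2043. The names `FEYNMAN_FACTOR`, `START_YEAR` and `limit_year` are hypothetical.

```python
import math

FEYNMAN_FACTOR = 1e11   # possible improvement over 1985 levels (assumed starting point)
START_YEAR = 1985

def limit_year(doubling_period_years: float) -> float:
    """Year in which a steady doubling would use up Feynman's factor."""
    doublings_needed = math.log2(FEYNMAN_FACTOR)  # about 36.5 doublings
    return START_YEAR + doublings_needed * doubling_period_years

for period in (1.5, 1.57):
    print(f"doubling every {period} years -> around {limit_year(period):.0f}")
```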

What is the smallest energy needed for a single-bit computation? As usual, thermodynamics gives us an answer. In 1961, IBM Fellow Rolf Landauer reasoned that the energy cost of computation wasn't in the computation itself, but in clearing the result of the previous computation to make room for the next result.[5] Because of an entropy increase, this operation has an energy cost of

$$kT \ln 2,$$

where $k$ is Boltzmann's constant and $T$ is the absolute temperature. The natural logarithm of two follows from the binary nature of the operation. At room temperature, $kT$ is $4.11 \times 10^{-21}$ J, so erasing a single bit costs at least about $2.85 \times 10^{-21}$ J.
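Here is a quick numerical check of the Landauer bound. This sketch assumes "room temperature" means 298 K; Boltzmann's constant is the exact value fixed by the 2019 SI redefinition.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact in the 2019 SI)
T_ROOM = 298.0       # assumed "room temperature", kelvin

kT = K_B * T_ROOM
landauer_j = kT * math.log(2)   # minimum energy to erase one bit

print(f"kT       = {kT:.3e} J")          # about 4.114e-21 J
print(f"kT ln(2) = {landauer_j:.3e} J")  # about 2.852e-21 J
```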

References:

1. Gordon E. Moore, "Cramming more components onto integrated circuits," Electronics, vol. 38, no. 8 (April 19, 1965).

2. Erich Strohmaier, "Japan Reclaims Top Ranking on Latest TOP500 List of World's Supercomputers," TOP500 Press Release, June 16, 2011.

3. Jonathan Koomey, Stephen Berard, Marla Sanchez and Henry Wong, "Implications of Historical Trends in the Electrical Efficiency of Computing," IEEE Annals of the History of Computing, vol. 33, no. 3 (July-September 2011), pp. 46-54.

4. Kate Greene, "A New and Improved Moore's Law," Technology Review, September 12, 2011.

5. R. Landauer, "Irreversibility and heat generation in the computing process," IBM Journal of Research and Development, vol. 5, no. 3 (July 1961), pp. 183-191.
